This is a newly installed Sentry instance, less than 90 days old.

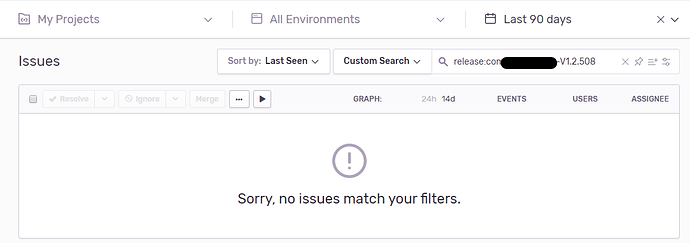

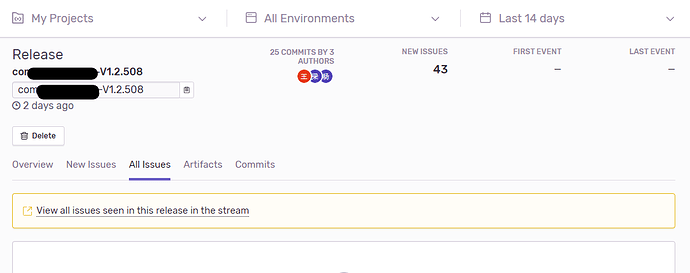

Today I synchronized docker-compose.yml with the latest version and re-ran install.sh to update all Docker images to Sentry 10.1.0.dev04c241f8. Afterwards I found that the number of ghost issues had increased to 43, as follows:

I wonder whether these are actually related to something other than the release, since our Sentry system has not been put into use yet.

A more detailed history

Today, when I first logged in, I found the following warning:

With this information, I checked and found that the Docker container snuba-consumer was not healthy. I then checked the container logs and found the following errors repeating:

    + '[' consumer = bash ']'
    + '[' c = - ']'
    + '[' consumer = api ']'
    + snuba consumer --help
    + set -- snuba consumer --auto-offset-reset=latest --max-batch-time-ms 750
    + exec gosu snuba snuba consumer --auto-offset-reset=latest --max-batch-time-ms 750
    2020-02-19 00:40:13,518 New partitions assigned: {Partition(topic=Topic(name='events'), index=0): 6120}
    Traceback (most recent call last):
      File "/usr/local/bin/snuba", line 11, in <module>
        load_entry_point('snuba', 'console_scripts', 'snuba')()
      File "/usr/local/lib/python3.7/site-packages/click/core.py", line 722, in __call__
        return self.main(*args, **kwargs)
      File "/usr/local/lib/python3.7/site-packages/click/core.py", line 697, in main
        rv = self.invoke(ctx)
      File "/usr/local/lib/python3.7/site-packages/click/core.py", line 1066, in invoke
        return _process_result(sub_ctx.command.invoke(sub_ctx))
      File "/usr/local/lib/python3.7/site-packages/click/core.py", line 895, in invoke
        return ctx.invoke(self.callback, **ctx.params)
      File "/usr/local/lib/python3.7/site-packages/click/core.py", line 535, in invoke
        return callback(*args, **kwargs)
      File "/usr/src/snuba/snuba/cli/consumer.py", line 156, in consumer
        consumer.run()
      File "/usr/src/snuba/snuba/utils/streams/batching.py", line 137, in run
        self._run_once()
      File "/usr/src/snuba/snuba/utils/streams/batching.py", line 145, in _run_once
        msg = self.consumer.poll(timeout=1.0)
      File "/usr/src/snuba/snuba/utils/streams/kafka.py", line 688, in poll
        return super().poll(timeout)
      File "/usr/src/snuba/snuba/utils/streams/kafka.py", line 402, in poll
        raise ConsumerError(str(error))
    snuba.utils.streams.consumer.ConsumerError: KafkaError{code=OFFSET_OUT_OF_RANGE,val=1,str="Broker: Offset out of range"}
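
The last line of the traceback carries the Kafka error code. As a minimal sketch, assuming the snuba-consumer log has been saved as text, the following counts the distinct `KafkaError` codes in it, which may help confirm whether `OFFSET_OUT_OF_RANGE` is the only error being repeated. The name `kafka_error_codes` is hypothetical and not part of Snuba.

```python
import re
from collections import Counter

# Matches the KafkaError repr printed at the end of the traceback, e.g.
# KafkaError{code=OFFSET_OUT_OF_RANGE,val=1,str="Broker: Offset out of range"}
KAFKA_ERROR_RE = re.compile(r"KafkaError\{code=(?P<code>[A-Z0-9_]+),val=(?P<val>-?\d+)")


def kafka_error_codes(log_text):
    """Count how often each KafkaError code appears in log_text."""
    return Counter(m.group("code") for m in KAFKA_ERROR_RE.finditer(log_text))


# Sample line copied from the log in this post.
sample = (
    "snuba.utils.streams.consumer.ConsumerError: "
    'KafkaError{code=OFFSET_OUT_OF_RANGE,val=1,str="Broker: Offset out of range"}\n'
)
print(kafka_error_codes(sample))
```

If other codes show up besides `OFFSET_OUT_OF_RANGE`, the consumer may be failing for more than one reason.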

For the worker container, I see the following errors:

    13:07:00 [INFO] sentry.tasks.update_user_reports: update_user_reports.records_updated (reports_with_event=0 updated_reports=0 reports_to_update=0)
    13:22:00 [INFO] sentry.tasks.update_user_reports: update_user_reports.records_updated (reports_with_event=0 updated_reports=0 reports_to_update=0)
    13:37:00 [INFO] sentry.tasks.update_user_reports: update_user_reports.records_updated (reports_with_event=0 updated_reports=0 reports_to_update=0)
    13:52:00 [INFO] sentry.tasks.update_user_reports: update_user_reports.records_updated (reports_with_event=0 updated_reports=0 reports_to_update=0)
    14:07:00 [INFO] sentry.tasks.update_user_reports: update_user_reports.records_updated (reports_with_event=0 updated_reports=0 reports_to_update=0)
    %3|1582035722.365|FAIL|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035722.365|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035722.365|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035760.426|FAIL|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035760.426|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035760.426|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035760.426|FAIL|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035760.426|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035760.426|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035760.645|FAIL|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035760.645|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035760.645|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035760.646|FAIL|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035760.646|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035760.646|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035789.566|FAIL|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035789.566|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035789.566|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035802.598|FAIL|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035802.598|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035802.598|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035802.598|FAIL|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035802.598|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035802.598|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035803.530|FAIL|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035803.530|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035803.530|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035803.530|FAIL|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035803.530|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035803.530|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035806.556|FAIL|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035806.556|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035806.556|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035806.556|FAIL|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035806.556|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035806.556|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035856.996|FAIL|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035856.996|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035856.996|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035856.996|FAIL|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035856.996|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035856.996|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035950.159|FAIL|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035950.159|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035950.159|ERROR|rdkafka#producer-1| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
    %3|1582035950.159|FAIL|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035950.159|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: kafka:9092/1001: Receive failed: Connection reset by peer
    %3|1582035950.159|ERROR|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: 1/1 brokers are down
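
The `%3|...` lines come from librdkafka, and their second field is a Unix epoch timestamp, which is hard to line up with the `HH:MM:SS` worker lines by eye. Here is a rough sketch, assuming the `%level|epoch|facility|client| message` layout seen above, that converts those timestamps to UTC so the broker outage can be placed on the same timeline. The helper name `parse_rdkafka_line` is my own and not part of any library.

```python
import re
from datetime import datetime, timezone

# Layout assumed from the log lines in this post:
#   %<level>|<epoch seconds>|<facility>|<client>| <message>
LINE_RE = re.compile(
    r"^%(?P<level>\d)\|(?P<epoch>\d+\.\d+)\|(?P<facility>[^|]+)\|(?P<client>[^|]+)\|\s*(?P<message>.*)$"
)


def parse_rdkafka_line(line):
    """Return a dict of fields for a librdkafka log line, or None if it does not match."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    when = datetime.fromtimestamp(float(m.group("epoch")), tz=timezone.utc)
    return {
        "level": int(m.group("level")),
        "time": when,
        "facility": m.group("facility"),
        "client": m.group("client"),
        "message": m.group("message"),
    }


# First failure line copied from the worker log in this post.
line = (
    "%3|1582035722.365|FAIL|rdkafka#producer-2| [thrd:kafka:9092/bootstrap]: "
    "kafka:9092/1001: Receive failed: Connection reset by peer"
)
record = parse_rdkafka_line(line)
print(record["time"].isoformat(timespec="seconds"), record["facility"], record["client"])
# → 2020-02-18T14:22:02+00:00 FAIL rdkafka#producer-2
```

If the worker container logs in UTC, this puts the first broker failure shortly after the 14:07:00 `update_user_reports` line.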

So I ran install.sh again, but it failed at the Docker image build (I need to import our own certificate and enable the Microsoft Teams integration, both of which had worked before):

    Digest: sha256:89942569b877ffc31fdcee940c33e61f8a4ecb281b2ac6e8fb2cd73bcd6053a8
    Status: Downloaded newer image for getsentry/sentry:latest
    docker.io/getsentry/sentry:latest
    Building web
    Step 1/10 : ARG SENTRY_IMAGE
    Step 2/10 : FROM ${SENTRY_IMAGE:-getsentry/sentry:latest}
     ---> 539deabae453
    Step 3/10 : COPY cert/*.crt /usr/local/share/ca-certificates/
     ---> 6b7df71f5391
    Step 4/10 : RUN update-ca-certificates
     ---> Running in 61aa3dc5f199
    Updating certificates in /etc/ssl/certs...
    1 added, 0 removed; done.
    Running hooks in /etc/ca-certificates/update.d...
    done.
    Removing intermediate container 61aa3dc5f199
     ---> 4bf467b7be97
    Step 5/10 : WORKDIR /usr/src/sentry
     ---> Running in ca3427a54b52
    Removing intermediate container ca3427a54b52
     ---> ac17bb629146
    Step 6/10 : ENV PYTHONPATH /usr/src/sentry
     ---> Running in 4e68634711cf
    Removing intermediate container 4e68634711cf
     ---> f2a21559c5df
    Step 7/10 : COPY . /usr/src/sentry
     ---> 9234103b513a
    Step 8/10 : RUN if [ -s requirements.txt ]; then pip install -r requirements.txt; fi
     ---> Running in 7e91db5c1d84
    DEPRECATION: Python 2.7 will reach the end of its life on January 1st, 2020. Please upgrade your Python as Python 2.7 won't be maintained after that date. A future version of pip will drop support for Python 2.7. More details about Python 2 support in pip, can be found at https://pip.pypa.io/en/latest/development/release-process/#python-2-support
    Requirement already satisfied: redis-py-cluster==1.3.4 in /usr/local/lib/python2.7/site-packages (from -r requirements.txt (line 2)) (1.3.4)
    WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.VerifiedHTTPSConnection object at 0x7f74ab2170d0>: Failed to establish a new connection: [Errno -3] Temporary failure in name resolution',)': /simple/sentry-msteams/
    WARNING: Retrying (Retry(total=3, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.VerifiedHTTPSConnection object at 0x7f74ab217a10>: Failed to establish a new connection: [Errno -3] Temporary failure in name resolution',)': /simple/sentry-msteams/
    WARNING: Retrying (Retry(total=2, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.VerifiedHTTPSConnection object at 0x7f74ab2178d0>: Failed to establish a new connection: [Errno -3] Temporary failure in name resolution',)': /simple/sentry-msteams/
    WARNING: Retrying (Retry(total=1, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.VerifiedHTTPSConnection object at 0x7f74ab217b50>: Failed to establish a new connection: [Errno -3] Temporary failure in name resolution',)': /simple/sentry-msteams/
    WARNING: Retrying (Retry(total=0, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.VerifiedHTTPSConnection object at 0x7f74ab217f50>: Failed to establish a new connection: [Errno -3] Temporary failure in name resolution',)': /simple/sentry-msteams/
    ERROR: Could not find a version that satisfies the requirement sentry-msteams (from -r requirements.txt (line 3)) (from versions: none)
    ERROR: No matching distribution found for sentry-msteams (from -r requirements.txt (line 3))
    Removing intermediate container 7e91db5c1d84
    Service 'web' failed to build: The command '/bin/sh -c if [ -s requirements.txt ]; then pip install -r requirements.txt; fi' returned a non-zero code: 1
    Cleaning up...
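
The `[Errno -3] Temporary failure in name resolution` warnings suggest that DNS lookups failed during the build, rather than that `sentry-msteams` is missing from the index. A small sketch for checking name resolution follows, assuming pip is using the default index at pypi.org (a custom index would need its own host name here). The function `can_resolve` is a hypothetical helper, and it would need to be run in the same network environment as the build to be meaningful.

```python
import socket


def can_resolve(host, resolver=socket.getaddrinfo):
    """Return True if host resolves, False on a name-resolution error.

    The resolver argument exists only so the check can be exercised
    without network access; by default the system resolver is used.
    """
    try:
        resolver(host, 443)
    except socket.gaierror:
        # This is the error family behind "[Errno -3] Temporary failure in name resolution".
        return False
    return True


if __name__ == "__main__":
    # Host names are assumptions: the default PyPI index and its file host.
    for host in ("pypi.org", "files.pythonhosted.org"):
        print(host, "resolves" if can_resolve(host) else "does NOT resolve")
```

If the names resolve on the host but not inside a container, the Docker daemon's DNS configuration would be the next thing to look at.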

To focus on the problem, I removed the Microsoft Teams integration, synchronized docker-compose.yml (my previous one used an old ClickHouse image), and re-ran install.sh.

Now all Docker containers are running and healthy, but the number of ghost issues is still increasing.

The logs for the worker container are:

    [root@iHandle_DB2 sentry.10]# docker logs bd0de2231e9e
    01:44:58 [WARNING] sentry.utils.geo: settings.GEOIP_PATH_MMDB not configured.
    01:45:02 [INFO] sentry.plugins.github: apps-not-configured
    01:45:02 [INFO] sentry.bgtasks: bgtask.spawn (task_name=u'sentry.bgtasks.clean_dsymcache:clean_dsymcache')
    01:45:02 [INFO] sentry.bgtasks: bgtask.spawn (task_name=u'sentry.bgtasks.clean_releasefilecache:clean_releasefilecache')
     -------------- celery@bd0de2231e9e v3.1.18 (Cipater)
    ---- **** -----
    --- * ***  * -- Linux-3.10.0-1062.12.1.el7.x86_64-x86_64-with-debian-10.1
    -- * - **** ---
    - ** ---------- [config]
    - ** ---------- .> app: sentry:0x7f19cab58090
    - ** ---------- .> transport: redis://redis:6379/0
    - ** ---------- .> results: disabled
    - *** --- * --- .> concurrency: 8 (prefork)
    -- ******* ----
    --- ***** ----- [queues]
     -------------- .> activity.notify exchange=default(direct) key=activity.notify
                    .> alerts exchange=default(direct) key=alerts
                    .> app_platform exchange=default(direct) key=app_platform
                    .> assemble exchange=default(direct) key=assemble
                    .> auth exchange=default(direct) key=auth
                    .> buffers.process_pending exchange=default(direct) key=buffers.process_pending
                    .> cleanup exchange=default(direct) key=cleanup
                    .> commits exchange=default(direct) key=commits
                    .> counters-0 exchange=counters(direct) key=
                    .> data_export exchange=default(direct) key=data_export
                    .> default exchange=default(direct) key=default
                    .> digests.delivery exchange=default(direct) key=digests.delivery
                    .> digests.scheduling exchange=default(direct) key=digests.scheduling
                    .> email exchange=default(direct) key=email
                    .> events.preprocess_event exchange=default(direct) key=events.preprocess_event
                    .> events.process_event exchange=default(direct) key=events.process_event
                    .> events.reprocess_events exchange=default(direct) key=events.reprocess_events
                    .> events.reprocessing.preprocess_event exchange=default(direct) key=events.reprocessing.preprocess_event
                    .> events.reprocessing.process_event exchange=default(direct) key=events.reprocessing.process_event
                    .> events.save_event exchange=default(direct) key=events.save_event
                    .> files.delete exchange=default(direct) key=files.delete
                    .> incidents exchange=default(direct) key=incidents
                    .> integrations exchange=default(direct) key=integrations
                    .> merge exchange=default(direct) key=merge
                    .> options exchange=default(direct) key=options
                    .> relay_config exchange=default(direct) key=relay_config
                    .> reports.deliver exchange=default(direct) key=reports.deliver
                    .> reports.prepare exchange=default(direct) key=reports.prepare
                    .> search exchange=default(direct) key=search
                    .> sleep exchange=default(direct) key=sleep
                    .> stats exchange=default(direct) key=stats
                    .> triggers-0 exchange=triggers(direct) key=
                    .> unmerge exchange=default(direct) key=unmerge
                    .> update exchange=default(direct) key=update

What should my next step be to investigate this?